Question

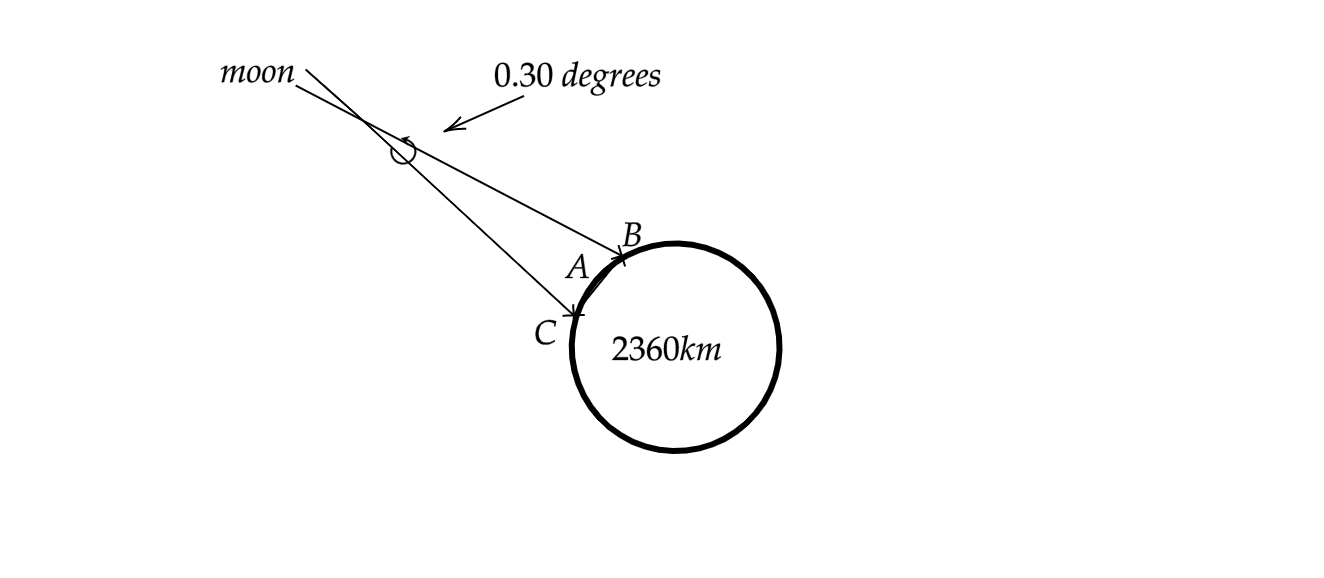

The angular distance between the stars turns out to be approximately 1100 arc seconds, or 0.30 degrees. If the Moon appears to shift by 0.3 degrees when observed from two vantage points 2360 km apart, find the distance of the Moon from the surface of the Earth, given that the angular diameter of the Moon is 0.5 degrees.
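As a quick check on the conversion quoted in the question, the sketch below converts arc seconds to degrees (3600 arc seconds per degree). The function name `arcsec_to_degrees` is purely illustrative. It shows that 1100″ is about 0.306°, which the question rounds to roughly 0.3°.

```python
ARCSEC_PER_DEGREE = 3600  # 60 arcminutes per degree x 60 arcseconds per arcminute


def arcsec_to_degrees(arcsec: float) -> float:
    """Convert an angle from arc seconds to degrees (hypothetical helper)."""
    return arcsec / ARCSEC_PER_DEGREE


shift_deg = arcsec_to_degrees(1100)
print(f"1100 arcsec = {shift_deg:.4f} degrees")
```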

A) 450642 km

B) 450392 km

C) 325684 km

D) 480264 km

Solution

The phenomenon described in the question is parallax: the apparent angular displacement of an object when it is viewed from two different positions. For a celestial body, the term often refers to the shift that arises because we observe it from the surface of the Earth rather than from the centre of the Earth. Parallax also arises when the viewpoint changes because of relative motion.
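For a small parallax angle, the baseline between the two vantage points is approximately an arc of a circle centred on the Moon, so the distance follows from the arc-length relation. A sketch, assuming the baseline $b$ is roughly perpendicular to the line of sight and $D$ denotes the distance to the Moon:

```latex
\theta \approx \frac{b}{D}
\quad\Longrightarrow\quad
D \approx \frac{b}{\theta},
\qquad \theta \text{ in radians}, \; b = 2360\ \text{km}
```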

Complete step-by-step solution:

From the question, the angular shift of the Moon when viewed from the two vantage points is 0.3 degrees. Let this angular shift be θ.

Since the arc-length relation between baseline, distance and angle holds only when the angle is expressed in radians, we must first convert θ from degrees to radians.
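The conversion and the small-angle parallax estimate can be sketched as follows, assuming the simple relation D ≈ b/θ with the baseline taken perpendicular to the line of sight. The function name `parallax_distance` is hypothetical, and the sketch ignores the Earth's radius and the Moon's angular diameter.

```python
import math

BASELINE_KM = 2360.0  # separation of the two vantage points (from the question)
SHIFT_DEG = 0.3       # observed angular shift of the Moon (from the question)


def parallax_distance(baseline: float, shift_deg: float) -> float:
    """Small-angle parallax estimate: distance = baseline / angle (angle in radians)."""
    theta_rad = math.radians(shift_deg)  # degrees -> radians, i.e. multiply by pi/180
    return baseline / theta_rad


theta = math.radians(SHIFT_DEG)
distance_km = parallax_distance(BASELINE_KM, SHIFT_DEG)
print(f"theta = {theta:.6f} rad")
print(f"distance = {distance_km:,.0f} km")
```

With the exact value of π, θ ≈ 0.005236 rad and the estimate is 2360 × 600/π ≈ 450,727 km. This is closest to option A; the small difference from the listed values presumably comes from rounding θ in a hand calculation.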