Question

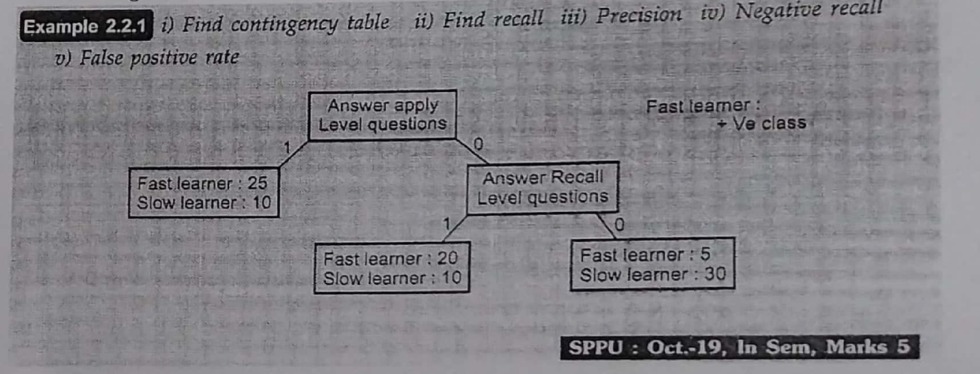

Example 2.2.1: Find i) the contingency table, ii) recall, iii) precision, iv) negative recall, and v) the false positive rate.

i) Contingency table:

| | Predicted Fast learner | Predicted Slow learner |
|---|---|---|
| Actual Fast learner | 45 | 5 |
| Actual Slow learner | 20 | 30 |

ii) Recall = 0.9

iii) Precision ≈ 0.6923

iv) Negative Recall = 0.6

v) False Positive Rate = 0.4

Solution

To solve this problem, we first need to identify the True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN) from the given decision tree. The problem states that "Fast learner" is the positive class. Therefore, "Slow learner" is the negative class.

A decision tree predicts the majority class for instances reaching a leaf node.

Let's analyze each leaf node:

1. Left Leaf Node:

- "Fast learner: 25"

- "Slow learner: 10"

The majority class is "Fast learner" (25 > 10). So, the model predicts "Fast learner" for these instances.

- True Positives (TP): Actual "Fast learner" correctly predicted as "Fast learner" = 25

- False Positives (FP): Actual "Slow learner" incorrectly predicted as "Fast learner" = 10

2. Middle-Right Leaf Node:

- "Fast learner: 20"

- "Slow learner: 10"

The majority class is "Fast learner" (20 > 10). So, the model predicts "Fast learner" for these instances.

- True Positives (TP): Actual "Fast learner" correctly predicted as "Fast learner" = 20

- False Positives (FP): Actual "Slow learner" incorrectly predicted as "Fast learner" = 10

3. Rightmost Leaf Node:

- "Fast learner: 5"

- "Slow learner: 30"

The majority class is "Slow learner" (30 > 5). So, the model predicts "Slow learner" for these instances.

- False Negatives (FN): Actual "Fast learner" incorrectly predicted as "Slow learner" = 5

- True Negatives (TN): Actual "Slow learner" correctly predicted as "Slow learner" = 30

Now, aggregate the counts:

- Total True Positives (TP) = 25 (from Leaf 1) + 20 (from Leaf 2) = 45

- Total False Positives (FP) = 10 (from Leaf 1) + 10 (from Leaf 2) = 20

- Total False Negatives (FN) = 5 (from Leaf 3)

- Total True Negatives (TN) = 30 (from Leaf 3)

Total number of instances = TP + FP + FN + TN = 45 + 20 + 5 + 30 = 100
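The leaf-by-leaf bookkeeping above can be sketched in Python. This is a minimal illustration that assumes the three leaf counts read from the tree; the names `leaves` and `confusion_counts`, and the leaf labels, are hypothetical and not part of the original example.

```python
# Leaf counts as read from the decision tree: (fast learners, slow learners).
# The keys "left", "middle", "right" are illustrative labels for the three leaves.
leaves = {"left": (25, 10), "middle": (20, 10), "right": (5, 30)}


def confusion_counts(leaves):
    """Aggregate TP, FP, FN, TN, treating "Fast learner" as the positive class."""
    tp = fp = fn = tn = 0
    for fast, slow in leaves.values():
        if fast > slow:
            # Leaf predicts "Fast learner": fast learners are TP, slow learners are FP.
            tp += fast
            fp += slow
        else:
            # Leaf predicts "Slow learner": fast learners are FN, slow learners are TN.
            # A tie would also land here; that choice is an assumption, and no leaf
            # in this example is tied.
            fn += fast
            tn += slow
    return tp, fp, fn, tn


tp, fp, fn, tn = confusion_counts(leaves)
print(tp, fp, fn, tn, tp + fp + fn + tn)  # 45 20 5 30 100
```

Each leaf contributes to exactly two of the four counts, which is why the four totals add up to the 100 instances in the tree.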

Now, we can calculate the required metrics:

i) Contingency Table (Confusion Matrix): The contingency table summarizes the performance of the classification model.

| | Predicted Fast learner (Positive) | Predicted Slow learner (Negative) | Total Actual |
|---|---|---|---|
| Actual Fast learner (Positive) | 45 (TP) | 5 (FN) | 50 |
| Actual Slow learner (Negative) | 20 (FP) | 30 (TN) | 50 |
| Total Predicted | 65 | 35 | 100 |

ii) Recall (True Positive Rate or Sensitivity): Recall measures the proportion of actual positive cases that were correctly identified by the model.

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{45}{45 + 5} = \frac{45}{50} = 0.9$$

iii) Precision (Positive Predictive Value): Precision measures the proportion of positive predictions that were actually correct.

$$\text{Precision} = \frac{TP}{TP + FP} = \frac{45}{45 + 20} = \frac{45}{65} \approx 0.6923$$

iv) Negative Recall (True Negative Rate or Specificity): Negative Recall measures the proportion of actual negative cases that were correctly identified by the model.

$$\text{Negative Recall} = \frac{TN}{TN + FP} = \frac{30}{30 + 20} = \frac{30}{50} = 0.6$$

v) False Positive Rate (FPR): FPR measures the proportion of actual negative cases that were incorrectly identified as positive.

$$\text{False Positive Rate} = \frac{FP}{FP + TN} = \frac{20}{20 + 30} = \frac{20}{50} = 0.4$$
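As a cross-check, the four ratios can be recomputed in a few lines of Python. This is only a sketch: the function name `rates` and the dictionary keys are hypothetical, and exact fractions are used so that the rounding of precision is explicit.

```python
from fractions import Fraction

# Aggregated counts from the worked example ("Fast learner" is the positive class).
TP, FP, FN, TN = 45, 20, 5, 30


def rates(tp, fp, fn, tn):
    """Return the four metrics as exact fractions."""
    return {
        "recall": Fraction(tp, tp + fn),
        "precision": Fraction(tp, tp + fp),
        "negative_recall": Fraction(tn, tn + fp),
        "false_positive_rate": Fraction(fp, fp + tn),
    }


metrics = rates(TP, FP, FN, TN)
for name, value in metrics.items():
    print(f"{name}: {value} = {float(value):.4f}")

# Sanity check: FPR and negative recall share the denominator TN + FP,
# so together they must account for every actual negative.
assert metrics["false_positive_rate"] + metrics["negative_recall"] == 1
```

Precision reduces to 9/13, which rounds to 0.6923; the other three values are exact decimals. The final assertion reflects that the false positive rate is the complement of negative recall (0.4 = 1 - 0.6).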

Explanation of the solution:

- Identify TP, FP, FN, TN: For each leaf node of the decision tree, determine the predicted class (the majority class in that node). Then use the actual counts of "Fast learner" (positive class) and "Slow learner" (negative class) within that node to categorize the instances as True Positives (TP), False Positives (FP), False Negatives (FN), or True Negatives (TN).
  - For nodes predicting "Fast learner": actual "Fast learner" instances are TP, and actual "Slow learner" instances are FP.
  - For nodes predicting "Slow learner": actual "Fast learner" instances are FN, and actual "Slow learner" instances are TN.

- Aggregate Counts: Sum the TP, FP, FN, and TN values over all leaf nodes.
  - TP = 25 + 20 = 45
  - FP = 10 + 10 = 20
  - FN = 5
  - TN = 30

- Calculate Metrics: Build the contingency table from the aggregated counts, then apply the standard formulas for Recall, Precision, Negative Recall, and False Positive Rate.